Listening Guide: Exam 3

Jean-Claude Risset: Computer Suite for Little Boy (1968)

Risset (b. 1938, France) is one of the early pioneers of computer music. He studied music as a pianist and composer, worked for over three years with Max Mathews at Bell Laboratories on computer sound synthesis (1965-69), and was hired by Pierre Boulez to set up and head the Computer Department at IRCAM (1975-79).

This work was realized in 1968 at Bell Laboratories. It is one of the first substantial musical works entirely synthesized by computer: all the sounds used in the piece were produced with the Music V program (developed by Max Mathews et al.).

This Computer Suite is excerpted from incidental music, combining computer music with music for a chamber ensemble, that Risset originally composed for the play Little Boy by Pierre Halet. The play is a fictionalized look at the recurrent nightmares, guilt, and mental anguish experienced by one of the reconnaissance pilots after the Hiroshima bombing. “Little Boy” refers to the A-bomb itself, with which the pilot personally identifies. Some of the play’s action is “live” and some occurs only in the mind of the pilot.

Flight and Countdown follows the pilot as he relives the flight in a dream, ending with a (clearly audible) countdown to the release of the bomb. In addition to imitations of various instrumental timbres, realized with the state-of-the-art digital synthesis of the 1960s that was a big part of the research Risset and Mathews were pursuing, you will hear many glissando (sliding pitch) gestures used to simulate the flight of the plane.
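For readers curious how a glissando is produced digitally, here is a minimal Python sketch. It is not Risset’s Music V code; it simply shows one common approach under stated assumptions: the frequency is interpolated exponentially (so the pitch moves at a steady rate in octaves), and the phase is accumulated sample by sample so the waveform stays continuous while the frequency changes. The function name and parameters are hypothetical.

```python
import math


def glissando(f_start, f_end, duration_s, sample_rate=44100):
    """Sine tone sliding exponentially from f_start to f_end (in Hz)."""
    n = int(duration_s * sample_rate)
    phase = 0.0
    samples = []
    for i in range(n):
        frac = i / max(n - 1, 1)
        # Exponential interpolation: equal time steps give equal pitch intervals.
        freq = f_start * (f_end / f_start) ** frac
        samples.append(math.sin(phase))
        # Accumulating phase (instead of computing sin(2*pi*freq*t)) keeps the
        # waveform continuous, avoiding clicks and pitch errors as freq changes.
        phase += 2 * math.pi * freq / sample_rate
    return samples


# A two-octave upward swoop lasting half a second.
swoop = glissando(220.0, 880.0, 0.5)
```

A linear frequency ramp would also slide, but it would seem to rush through the low register and crawl through the high one, because we hear pitch logarithmically.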

Jean-Claude Risset: Songes (1979) trans. “Dreams”

This work was realized in Paris at the Institut de Recherche et de Coordination Acoustique/Musique (IRCAM) using a variant of the Music V program. The original program (Max Mathews et al.) was augmented to enable the processing of digitized sounds as well as digital sound synthesis. The work begins with these digitized sounds, extracted from recordings of material from an earlier instrumental composition by Risset. These sounds are digitally mixed and processed, often to produce spatial effects. A long-range organization of frequency can be heard in the work: at the beginning, frequencies are generally near the middle of the audio spectrum; the frequency range then gradually expands, filling the spectrum from low to high; finally, the mid-range frequencies drop out, leaving only the lows and highs.
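As a rough illustration of what “digitally mixed and processed to produce spatial effects” can mean, here is a sketch using constant-power panning, a common textbook technique. The source does not say which spatial processing Risset used in Songes, so this choice of technique and the function names are assumptions.

```python
import math


def pan_constant_power(mono, pan_start, pan_end):
    """Move a mono signal across the stereo field.

    pan runs from 0.0 (hard left) to 1.0 (hard right), changing linearly
    over the signal. cos/sin gains keep left**2 + right**2 constant, so
    the sound does not dip in loudness as it passes the center.
    """
    n = len(mono)
    left, right = [], []
    for i, s in enumerate(mono):
        pan = pan_start + (pan_end - pan_start) * (i / max(n - 1, 1))
        angle = pan * math.pi / 2
        left.append(s * math.cos(angle))
        right.append(s * math.sin(angle))
    return left, right


def mix(*signals):
    """Sum signals sample by sample; shorter signals are silent after they end."""
    length = max(len(s) for s in signals)
    return [sum(s[i] for s in signals if i < len(s)) for i in range(length)]


# Two one-second tones crossing in opposite directions, mixed to stereo.
sr = 44100
tone_a = [0.5 * math.sin(2 * math.pi * 330 * i / sr) for i in range(sr)]
tone_b = [0.5 * math.sin(2 * math.pi * 495 * i / sr) for i in range(sr)]
a_left, a_right = pan_constant_power(tone_a, 0.0, 1.0)  # left to right
b_left, b_right = pan_constant_power(tone_b, 1.0, 0.0)  # right to left
stereo_left, stereo_right = mix(a_left, b_left), mix(a_right, b_right)
```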

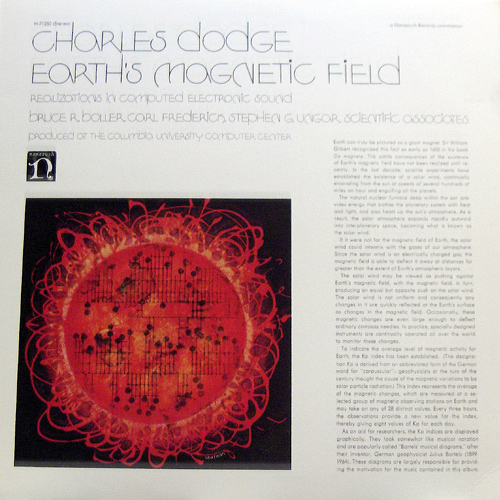

Charles Dodge: Earth’s Magnetic Field–Part I [1970] (2 excerpts) (Schrader p. 156)

This is an early example of computer music. The “score” for the work is derived from Bartels’ diagrams, which chart measurements of fluctuations in the earth’s magnetic activity in a graphic representation not unlike traditional music notation. In fact, they are sometimes referred to as Bartels’ musical diagrams. Dodge translated the data for the year 1961 into music, converting the vertical axis to pitch and the horizontal axis to duration. In Part One, the pitch component is mapped to a diatonic major scale (seven notes per octave: do-re-mi-fa-so-la-ti-do, etc.). It is monophonic (one note at a time), since Bartels’ measurements have only one value at a time. Complexity is introduced later in the work through overlapping envelopes, complex timbres with audible high-pitched overtones, and added reverb.
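The kind of data-to-music mapping described above can be sketched in a few lines of Python. Dodge’s exact mapping is not given here, so the base note, the one-scale-degree-per-unit rule, the duration unit, and the readings themselves are all hypothetical; the point is only how numbers become a monophonic melody in a major scale.

```python
# Semitone offsets of the seven degrees of a major scale (do re mi fa so la ti).
MAJOR_STEPS = [0, 2, 4, 5, 7, 9, 11]


def value_to_midi(value, base_note=48):
    """Map a non-negative integer reading to a MIDI note in a major scale.

    Each unit of the reading moves up one scale degree; every seven units
    add an octave. base_note=48 (C3) is an arbitrary, hypothetical choice.
    """
    octave, degree = divmod(value, len(MAJOR_STEPS))
    return base_note + 12 * octave + MAJOR_STEPS[degree]


def sonify(readings, seconds_per_unit=0.25):
    """Turn (value, duration_units) pairs into one-note-at-a-time (midi, seconds) events."""
    return [(value_to_midi(v), d * seconds_per_unit) for v, d in readings]


# Hypothetical readings, not Bartels' actual 1961 data.
melody = sonify([(0, 2), (2, 1), (4, 1), (7, 4)])
# [(48, 0.5), (52, 0.25), (55, 0.25), (60, 1.0)]
```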

John Chowning: Stria (1977) — def. “one of a series of parallel lines, bands, or grooves”

Chowning (b. 1934, New Jersey) studied composition with Nadia Boulanger in Paris and received a doctorate in composition from Stanford University. In 1964, while still a student, he set up the first on-line computer music system ever, with help from Max Mathews of Bell Laboratories and David Poole of Stanford’s Artificial Intelligence Laboratory. In 1967 Chowning discovered the audio-rate linear frequency modulation algorithm. This breakthrough offered a very simple yet elegant way to create and control complex time-varying timbres. His work revolutionized digital sound synthesis once Yamaha and New England Digital (maker of the Synclavier) licensed the technology and packaged it for “non-computer jocks.”
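Simple FM is often written as sin(2π·fc·t + I·sin(2π·fm·t)), where fc is the carrier frequency, fm the modulating frequency, and I the modulation index. The Python sketch below uses that standard textbook form; it is not Chowning’s own code, and the function name and parameters are hypothetical. It sweeps the index over the note because the index controls how much energy spreads into sidebands, which is what makes FM timbres evolve so cheaply: one extra oscillator and one changing number.

```python
import math


def fm_tone(carrier_hz, mod_hz, index_start, index_end, duration_s,
            sample_rate=44100):
    """Simple FM: sin(2*pi*fc*t + I(t) * sin(2*pi*fm*t)).

    The modulation index I(t) moves linearly from index_start to index_end.
    A larger index spreads energy into more sidebands, so sweeping it makes
    the timbre brighten or darken over the course of the note.
    """
    n = int(duration_s * sample_rate)
    samples = []
    for i in range(n):
        t = i / sample_rate
        index = index_start + (index_end - index_start) * (i / max(n - 1, 1))
        samples.append(math.sin(2 * math.pi * carrier_hz * t
                                + index * math.sin(2 * math.pi * mod_hz * t)))
    return samples


# Harmonic 1:1 carrier-to-modulator ratio, index rising from 0 to 5 over 0.5 s:
# the note starts as a pure sine and grows steadily brighter.
note = fm_tone(440.0, 440.0, 0.0, 5.0, 0.5)
```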

Stria was begun in late 1975 at the Stanford University Center for Computer Research in Music and Acoustics (CCRMA) as a commission for presentation at Luciano Berio’s exhibit of electronic music at IRCAM in 1977. Chowning was the pioneer of linear frequency modulation (FM). This work, his third major work to use FM, makes consistent use of non-harmonic ratios and non-tonal divisions of the frequency spectrum. In other words, it does not attempt to synthesize traditional-sounding instruments (though surely it was this aspect of FM’s power that initially attracted Yamaha to license the technology). Instead, very gradually evolving, clangorous, bell-like sounds are used throughout the piece. Several levels of the piece, from “microscopic” frequency ratios to overall form, make use of the Golden Section ratio (y/x = x/(x+y), roughly 0.618).
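The 0.618 figure follows from the ratio given above: setting r = y/x, the equation y/x = x/(x+y) becomes r = 1/(1+r), or r² + r − 1 = 0, whose positive root is (√5 − 1)/2 ≈ 0.618. The sketch below checks that value and then lists FM sideband frequencies (carrier ± k × modulator) for a hypothetical 100 Hz carrier, comparing a harmonic 1:1 ratio with a golden-ratio one. The carrier value and the 1:φ ratio are illustrative assumptions, not parameters documented for Stria.

```python
import math

# Positive root of r**2 + r - 1 = 0, i.e. r = 1 / (1 + r).
GOLDEN_SECTION = (math.sqrt(5) - 1) / 2   # about 0.618
PHI = 1 / GOLDEN_SECTION                  # about 1.618


def fm_sidebands(carrier_hz, ratio, max_order):
    """Frequencies carrier +/- k*modulator for k = 0..max_order.

    modulator = carrier * ratio. Negative frequencies fold back as positive
    ones, so absolute values are taken; values are rounded to 3 decimals.
    """
    mod_hz = carrier_hz * ratio
    freqs = set()
    for k in range(max_order + 1):
        freqs.add(round(abs(carrier_hz + k * mod_hz), 3))
        freqs.add(round(abs(carrier_hz - k * mod_hz), 3))
    return sorted(freqs)


harmonic = fm_sidebands(100.0, 1.0, 3)   # 0, 100, 200, 300, 400 Hz: multiples of 100
golden = fm_sidebands(100.0, PHI, 3)     # 61.803, 100, 223.607, ...: not multiples of 100
```

With the 1:1 ratio every component lands on a harmonic of 100 Hz, which the ear fuses into a pitched, instrument-like tone; with the golden ratio none of the sidebands (other than the carrier) do, which is why such spectra sound clangorous and bell-like.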

Chowning has said, “Stria was the most fun piece I have ever composed. It was all with programming and that was incredibly enlightening to me.” Unlike many of his works, Stria uses no random number generators. Instead, Chowning wrote a SAIL program (Stanford Artificial Intelligence Language) that generated note lists and other data from very compact programming cells. Slight changes in a cell would produce different but deterministic results, meaning the output would be identical if the same program were run again.
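SAIL is long obsolete, so here is a hypothetical Python sketch of the general idea only: a tiny “cell” of parameters is expanded into a note list by fixed rules with no random numbers, so rerunning it gives identical output, while changing one parameter gives a different but equally repeatable result. The cell format and the golden-ratio spacing rule are invented for illustration and are not Chowning’s actual program.

```python
PHI = (1 + 5 ** 0.5) / 2  # golden ratio, about 1.618


def expand_cell(base_hz, n_notes, start_s, spacing_s):
    """Expand a compact parameter 'cell' into (start_time, frequency) notes.

    Hypothetical rule: onsets are evenly spaced, and frequencies rise in
    n_notes equal steps toward (but not reaching) base_hz * PHI.
    No randomness is involved, so the same cell always gives the same notes.
    """
    return [(start_s + k * spacing_s, base_hz * PHI ** (k / n_notes))
            for k in range(n_notes)]


a = expand_cell(200.0, 4, 0.0, 0.5)
b = expand_cell(200.0, 4, 0.0, 0.5)   # same cell: identical note list
c = expand_cell(200.0, 5, 0.0, 0.5)   # one parameter changed: different notes
```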

Jon Appleton: Georganna’s Farewell (1975) (Schrader pp. 141-147)

In 1972, Appleton (b. 1939, Los Angeles) began developing the Dartmouth Digital Synthesizer with engineers Sydney Alonso and Cameron Jones. This instrument was the direct predecessor, or prototype, of the Synclavier. The most striking difference between the two was the lack of an organ- or piano-like keyboard on the earlier instrument. See Barry Schrader’s Introduction to Electro-Acoustic Music, pages 141-147, for a discussion of this instrument and its close relatives as well as a detailed discussion of Georganna’s Farewell.

Frank Zappa: The Girl with the Magnesium Dress (1984)

One of Frank’s early compositions created on his Synclavier (the liner notes credit The Barking Pumpkin Digital Gratification Consort), it was released on the album The Perfect Stranger, which also contained a number of Zappa’s instrumental compositions conducted by Pierre Boulez, founder of IRCAM in Paris. Frank says it’s about “a girl who hates men and kills them with her special dress. Its lightweight metal construction features a lethally pointed sort of micro-Wagnerian breastplate. When they die from dancing with her, she laughs at them and wipes it off.” (?? OK Frank, whatever you say!)

Meanwhile, notice that the work contains an interesting mix of realistic, sample-based instrument simulations, for which the later versions of the Synclavier were famous, alongside more artificial or synthetic sounds based on the FM and additive synthesis of the original instrument. Note also the superhuman speed and improvisatory “keyboard” style of the material and its phrasing.

Patric Cohen: Drum Machine (1990)

Patric was an Illinois University doctoral student from Sweden when he composed this work. He writes the following:

The task in Drum Machine was to drum the instrument’s built-in expectations out of earshot and to machine the unexpected results into a piece of music. The work was created in the composer’s home with a MIDI sequencer and a Roland D-10 synthesizer, part of which can be used as a drum machine.

Note: I have included this example to demonstrate that even back in the 1990s, you didn’t necessarily need a lot of fancy equipment to produce unusual and interesting work, just original ideas and a fresh approach to your instrument. Beginning with ideas extremely familiar to anyone who’s ever heard a drum machine, Cohen has built a surprising composition by pushing these ideas beyond the artificially imposed limits of conventional usage: for example, speeding up a “drum roll” until the frequency of “hits” enters the audio spectrum and is perceived as a pitch.
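The drum-roll effect comes down to simple arithmetic: a roll’s hit rate in hits per second is also its repetition frequency in Hz. Below roughly 20 Hz (an approximate, listener-dependent figure) we hear separate hits; above it, the repetitions begin to fuse into a pitch. A minimal sketch with hypothetical function names:

```python
# Approximate lower limit of pitch perception; the exact value varies by listener.
PITCH_THRESHOLD_HZ = 20.0


def roll_rate_hz(bpm, hits_per_beat):
    """Hits per second (equivalently, repetition frequency in Hz) of a roll."""
    return bpm / 60 * hits_per_beat


def heard_as_pitch(rate_hz):
    return rate_hz >= PITCH_THRESHOLD_HZ


def click_train(rate_hz, duration_s, sample_rate=44100):
    """Single-sample 'hits' every 1/rate_hz seconds: a crude digital drum roll."""
    n = int(duration_s * sample_rate)
    samples = [0.0] * n
    period = sample_rate / rate_hz
    k = 0
    while round(k * period) < n:
        samples[round(k * period)] = 1.0
        k += 1
    return samples


print(roll_rate_hz(120, 4), heard_as_pitch(roll_rate_hz(120, 4)))    # 8.0 False
print(roll_rate_hz(120, 32), heard_as_pitch(roll_rate_hz(120, 32)))  # 64.0 True
```

At 120 beats per minute, sixteenth notes are still a rhythm; push the same pattern to 32 hits per beat and it becomes a low buzz near 64 Hz.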

Paul Lansky: Notjustmoreidlechatter (1988)

Paul Lansky (b. 1944) attended the High School of Music and Art, Queens College, and Princeton University, where he is currently Professor of Music. He formerly played horn in the Dorian Wind Quintet.

Notjustmoreidlechatter was realized in 1988 using the computer music facilities at Princeton University. It is the third in a series of chatter pieces in which thousands of unintelligible synthesized word segments are thrown (with relatively careful aim) into what at first appears to be a complicated mess, but which adds up to a relatively simple and straightforward texture. In the middle of the piece, as the chattering comes “stage front,” the words move toward near intelligibility. But then the illusion is lost, and with it the hope of ever making any literal sense of it. As Lansky describes it, “All that is left is G-minor, which is not a bad consolation.”

Wendy Carlos: That’s Just It (1986)

That’s Just It is from Carlos’s Beauty in the Beast CD. The liner notes contain the following curious bit of “propaganda”:

All the music and sounds heard on this recording were directly digitally generated. This eliminates all the limitations of the microphone, the weak link necessary in nearly all other digital recording including those which include “sampling” technologies.

The pitch material for this composition comes from a “super-just intonation” scale that Carlos calls the Harmonic Scale. This is a just intonation scale with 144 notes per octave, which lets modulations remain in just intonation in each new temporary key; in other words, it allows a just scale to be transposed freely without retuning the synthesizer. The sounds are generated on her custom-built Synergy digital synthesizers.
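A short sketch can show why a fixed just scale needs extra notes in order to modulate. It uses a common 5-limit just major scale (an assumption; this is not Carlos’s Harmonic Scale) and transposes it to the dominant by multiplying every ratio by 3/2. Two of the resulting pitches are missing from the original scale: 45/32 (the F-sharp a G major scale needs, as expected) and 27/16, which misses the original sixth degree, 5/3, by the syntonic comma 81/80 (about 21.5 cents). A keyboard tuned to one fixed just scale cannot supply such pitches, which is the problem a large just scale like Carlos’s addresses.

```python
import math
from fractions import Fraction

# A common 5-limit just major scale, as frequency ratios above the tonic
# (illustrative assumption, not Carlos's tuning).
JUST_MAJOR = [Fraction(1), Fraction(9, 8), Fraction(5, 4), Fraction(4, 3),
              Fraction(3, 2), Fraction(5, 3), Fraction(15, 8)]


def octave_reduce(ratio):
    """Fold a ratio into the octave [1, 2)."""
    while ratio >= 2:
        ratio /= 2
    while ratio < 1:
        ratio *= 2
    return ratio


def transpose(scale, new_tonic):
    """Rebuild the scale on new_tonic (a ratio), keeping every interval pure."""
    return [octave_reduce(new_tonic * r) for r in scale]


def cents(ratio):
    return 1200 * math.log2(ratio)


dominant = transpose(JUST_MAJOR, Fraction(3, 2))
new_pitches = [r for r in dominant if r not in JUST_MAJOR]
print(new_pitches)                                          # [Fraction(27, 16), Fraction(45, 32)]
print(round(cents(Fraction(27, 16) / Fraction(5, 3)), 1))  # 21.5
```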

James Mobberley: Caution to the Winds (1990)

Mobberley (b. 1953) is Professor of Music at the University of Missouri-Kansas City and Composer-in-Residence of the Kansas City Symphony. He has received a Rome Prize and a Guggenheim Fellowship.

Caution to the Winds was commissioned by the Missouri Music Teachers Association. It is the fifth in a series of compositions for soloist and tape in which only sounds derived from the solo instrument are used in the tape accompaniment. In this piece, piano sounds were recorded, then digitally sampled and processed on a Fairlight Series IIx to complete the accompaniment. Severe memory limitations on the Fairlight strictly limited the length of the samples available to the composer. To Mr. Mobberley’s credit, he solves the dilemma ingeniously with a powerful composition whose frenetic energy and momentum leave no room for long samples, which might only have impeded the flow of energy anyway.
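The trade-off behind such memory limits is simple arithmetic: maximum sample length = memory ÷ (sample rate × bytes per sample). The figures below are hypothetical round numbers chosen for illustration, not the Fairlight’s actual specifications.

```python
def max_sample_seconds(memory_bytes, sample_rate_hz, bits_per_sample):
    """Longest mono sample, in seconds, that fits in the given memory."""
    bytes_per_second = sample_rate_hz * bits_per_sample / 8
    return memory_bytes / bytes_per_second


# Hypothetical: 16 KB of sample memory holding 8-bit samples.
print(max_sample_seconds(16 * 1024, 16384, 8))  # 1.0 (seconds, at 16,384 Hz)
print(max_sample_seconds(16 * 1024, 8192, 8))   # 2.0 (seconds, at 8,192 Hz)
```

Halving the sample rate doubles the available length, but it also halves the highest frequency the sample can contain (half the sample rate), so the extra time is paid for in dullness.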

Mark Phillips: Elegy and Honk (2001)

The entire accompaniment was created using Symbolic Sound’s Kyma system, which consists of a graphic software interface (running on a host computer) that communicates with a Capybara, essentially a box full of Motorola DSP chips and sample RAM outfitted with input/output jacks for audio and data. MIDI (Digital Performer) was used extensively to control and synchronize the Kyma sounds. Refer to the Study Guide Appendix for several Kyma screen images from this composition.

Not surprisingly, given its title, this work has two highly contrasting movements. The entire accompaniment to the Elegy is derived from a few English horn sounds: short notes in various registers, some “airy” key clicks, and a whoosh of air rushing through the instrument without the reed in place. The dense clouds of sound in the background come from a single very short English horn “blip” subjected to a process called granular synthesis, in which the original audio is exploded into tiny fragments that are transposed over a wide range and recombined into overlapping layers. A MIDI breath controller is also used to shape and control some of the Kyma sounds.
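Granular synthesis as described above can be sketched in plain Python. This is a generic illustration, not the Kyma implementation: it cuts short grains from random points in a source sound, transposes each by a random amount by resampling (linear interpolation), shapes it with a Hann window to avoid clicks, and overlap-adds the grains at random positions in the output. The function names, parameters, and the seeded random generator are assumptions made for this example.

```python
import math
import random


def hann(n):
    """Hann window of length n (n >= 2): fades a grain in and out smoothly."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / (n - 1)) for i in range(n)]


def resample(grain, ratio):
    """Read a grain at `ratio` speed with linear interpolation.

    ratio > 1 raises the pitch (and shortens the grain); ratio < 1 lowers it.
    """
    out = []
    pos = 0.0
    while pos < len(grain) - 1:
        i = int(pos)
        frac = pos - i
        out.append(grain[i] * (1 - frac) + grain[i + 1] * frac)
        pos += ratio
    return out


def granulate(source, out_len, n_grains, grain_len, semitone_range, seed=0):
    """Scatter transposed, windowed grains of `source` across a new buffer."""
    rng = random.Random(seed)          # seeded so the cloud is reproducible
    out = [0.0] * out_len
    for _ in range(n_grains):
        start = rng.randrange(0, len(source) - grain_len)
        semitones = rng.uniform(-semitone_range, semitone_range)
        grain = resample(source[start:start + grain_len], 2 ** (semitones / 12))
        if len(grain) < 2 or len(grain) >= out_len:
            continue                   # skip grains too short or too long to place
        onset = rng.randrange(0, out_len - len(grain))
        for i, (s, w) in enumerate(zip(grain, hann(len(grain)))):
            out[onset + i] += s * w    # overlap-add into the output
    return out


# A 0.1 s, 440 Hz "blip" exploded into a one-second cloud of 200 grains,
# each transposed randomly within +/- one octave.
sr = 44100
blip = [math.sin(2 * math.pi * 440 * i / sr) for i in range(sr // 10)]
cloud = granulate(blip, sr, n_grains=200, grain_len=441, semitone_range=12)
```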

There is a long historical tradition of lyrical and serene elegies, but throughout the early stages of my composition process, a brooding, edgy, ominous undercurrent kept “insisting” that it belonged in this elegy, so persistently that I finally gave in to it. The work was premiered in August 2001, and the second performance of the Elegy took place a few weeks later, on September 13, at an outdoor ceremony held in response to the events of September 11. For the performer and me, as well as for many in the audience, the music was permanently redefined. The intensity and dark mood of the work now seem absolutely “right” for an elegy.

A long transition, serving as an introduction to the second movement, gradually and slyly changes the mood of the work completely. This raucous movement expands the palette of English horn source material to include a menagerie of geese and duck sounds, joined by an old-fashioned bicycle horn that I analyzed and resynthesized in Kyma using sine wave additive synthesis, so that it can be transposed over a wide range and still fit the driving rhythm of the movement. Granular synthesis also plays a significant role in the “jam session” between the two main sections of the movement.
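The advantage of resynthesis mentioned above can be shown with a small sketch of sine wave additive synthesis: once a sound is described as a list of partials (frequency, amplitude), it can be transposed by scaling every frequency while its duration stays fixed, whereas simply playing a sample faster or slower changes pitch and length together. The partial list here is made up for illustration; it is not an analysis of the actual bicycle horn, and real analysis-resynthesis also tracks how each partial changes over time.

```python
import math


def additive(partials, duration_s, transpose_semitones=0.0, sample_rate=44100):
    """Sum of fixed sine partials, each given as (frequency_hz, amplitude).

    Transposition multiplies every frequency by 2**(semitones/12);
    the number of samples (the duration) does not change.
    """
    factor = 2 ** (transpose_semitones / 12)
    n = int(duration_s * sample_rate)
    return [sum(amp * math.sin(2 * math.pi * freq * factor * i / sample_rate)
                for freq, amp in partials)
            for i in range(n)]


# Hypothetical harmonic partials (illustrative values only).
honk_partials = [(410.0, 0.5), (820.0, 0.3), (1230.0, 0.15), (1640.0, 0.05)]
honk = additive(honk_partials, 0.25)
honk_up_fifth = additive(honk_partials, 0.25, transpose_semitones=7)  # same length
```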

Live Performance of Electro-Acoustic Music Without Tape or CD Playback

Gary Nelson: Variations of a Theme (and a Process) of Frederic Rzewski (1987)

Gary Nelson was the founder and director of the TIMARA center (Technology in Music and Related Arts) at Oberlin College, where he is a professor. He is a former tuba player and has long been a pioneer in algorithmic composition, computer interactivity, and artificial intelligence. The excerpts on this tape are from a live concert performance given at Ohio University (9/28/89). Nelson performs on his MIDI Horn, an instrument he designed and built that combines elements of a brass (valve) instrument and a typewriter into a wind controller for MIDI synthesizers. The instrument has a trumpet mouthpiece with an air pressure sensor; keys, played by one hand, that allow 12 chromatic alterations of the pitch in a pattern derived from a 4-valve fingering chart (like many tubas); keys operated by the other hand that determine which octave the instrument will sound in (again using a fingering pattern derived from brass instrument fingerings); programmable joysticks operated by the thumbs; and a “shift” key that turns all the other keys into a hexadecimal numeric keypad for sending non-musical data to his computer (a Macintosh) or to his bank of MIDI synthesizers (various Yamaha FM tone generators derived from DX7 technology).
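The idea of getting 12 chromatic alterations from a 4-valve pattern can be checked with a few lines of code. This assumes the conventional brass valve values (valve 1 lowers the pitch 2 semitones, valve 2 lowers 1, valve 3 lowers 3, valve 4 lowers 5) and ignores the tuning compromises of real valve combinations; it is not a description of the MIDI Horn’s actual key mapping.

```python
from itertools import combinations

# Conventional semitone lowering for each valve of a 4-valve brass instrument.
VALVE_SEMITONES = {1: 2, 2: 1, 3: 3, 4: 5}


def all_fingerings():
    """Every subset of the four valves, from open (no valves) to all four."""
    valves = sorted(VALVE_SEMITONES)
    for size in range(len(valves) + 1):
        yield from combinations(valves, size)


def lowering(fingering):
    return sum(VALVE_SEMITONES[v] for v in fingering)


# Group the 16 fingerings by how many semitones they lower the pitch.
chart = {}
for f in all_fingerings():
    chart.setdefault(lowering(f), []).append(f)

print(sorted(chart))  # [0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11]
```

Sixteen fingerings cover all twelve alterations, with a few alterations reachable by more than one fingering (for example, 3 semitones by valve 3 alone or by valves 1 and 2 together).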

Nelson’s music is based on live, real-time interaction with MIDI computer programs he has designed. No tape is used; all of the sounds are under the control of the soloist (Nelson) and are generated in real time by various tone generators. The nature and degree of control over his computer-generated “accompaniments” vary from one piece to the next. Though it is not always the case in Nelson’s music, in this piece it is relatively easy to distinguish the music played in “real time” by the soloist (i.e., the melodic theme itself and its variations) from the music generated by the computer. The computer-generated accompaniment is generally busier and more percussive than the theme. The pitches of the melodic theme (unaccompanied at the beginning) are actually programmed into the computer, so the performer doesn’t need to execute the fingerings for each new note of the theme; a simple articulation of the tongue tells the computer you want to play the next note of the theme (pretty cool, huh!). Variations include transposition, inversion, retrograde, and retrograde inversion, and are all initiated by various key combinations on the MIDI Horn. In addition, the performer can, at will, directly influence many aspects of the accompaniment, such as volume and timbre, as well as subject it to the same sorts of variations as the theme. The general character of the accompaniment (i.e., tempo, melodic intervals, number of “percussion players” available) cannot be freely altered during the course of the performance. Such changes would generally require modifying the specific program for the piece beforehand.
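The theme-following and variation logic described above can be sketched as follows. This is a hypothetical Python illustration, not Nelson’s software: pitches are MIDI note numbers, inversion mirrors each note around the theme’s first note, and each call to `articulate()` stands in for a tongued attack that advances to the next stored note.

```python
def transpose(theme, semitones):
    return [p + semitones for p in theme]


def invert(theme):
    """Mirror each interval around the first note of the theme."""
    axis = theme[0]
    return [2 * axis - p for p in theme]


def retrograde(theme):
    return list(reversed(theme))


def retrograde_inversion(theme):
    return retrograde(invert(theme))


class ThemeFollower:
    """Holds a pre-programmed theme; each articulation plays its next note."""

    def __init__(self, theme):
        self.original = list(theme)
        self.notes = list(theme)
        self.position = 0

    def vary(self, transform, *args):
        """Switch to a variation of the original theme and restart it."""
        self.notes = transform(self.original, *args)
        self.position = 0

    def articulate(self):
        """Return the next pitch, wrapping to the start after the last note."""
        pitch = self.notes[self.position]
        self.position = (self.position + 1) % len(self.notes)
        return pitch


theme = [60, 62, 64, 65, 67]                         # hypothetical theme: C D E F G
horn = ThemeFollower(theme)
first_three = [horn.articulate() for _ in range(3)]  # [60, 62, 64]
horn.vary(retrograde_inversion)
ri_start = horn.articulate()                         # 53
```

Because the pitches are stored in advance, the performer’s tongue only supplies timing; the key combinations simply choose which transform is applied to the stored theme.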

Russell Pinkston: Lizamander (2004) for flute and Max/MSP — with Elizabeth McNutt, flute

Russell Pinkston (b. 1948) is a composer, author, researcher, and teacher in the field of electro-acoustic music, and a professor of music at the University of Texas. Lizamander (composed for the soloist on this recording) is the second in a series of works for solo acoustic instruments and Max/MSP. The computer captures material played by the soloist during the performance and uses that material (as well as some prerecorded sounds) to generate a rhythmic accompaniment. The computer tracks the pitch of the flute throughout and uses this information to follow a preset list of cues, which trigger a variety of real-time processing techniques, including time stretching, pitch shifting, and harmonization. Refer to the Study Guide Appendix for Max/MSP screen images.
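A rough sketch of pitch tracking and cue following, written in Python rather than Max/MSP: a basic autocorrelation pitch estimator (a swapped-in textbook method; the source does not say which pitch tracker the piece uses) feeds a follower that steps through a preset cue list and reports which processing to trigger when the flute reaches each cue’s pitch. The cue pitches, the 50-cent tolerance, and the action names are hypothetical.

```python
import math


def detect_pitch(frame, sample_rate, fmin=200.0, fmax=2000.0):
    """Estimate the fundamental by picking the autocorrelation peak in a lag range."""
    min_lag = int(sample_rate / fmax)
    max_lag = int(sample_rate / fmin)
    best_lag, best_score = None, 0.0
    for lag in range(min_lag, max_lag + 1):
        score = sum(frame[i] * frame[i + lag] for i in range(len(frame) - lag))
        if score > best_score:
            best_lag, best_score = lag, score
    return sample_rate / best_lag if best_lag else None


class CueFollower:
    """Steps through (expected_hz, action) cues as matching pitches arrive."""

    def __init__(self, cues, tolerance_cents=50):
        self.cues = cues
        self.index = 0
        self.tolerance_cents = tolerance_cents

    def update(self, detected_hz):
        """Return the next cue's action if detected_hz matches it, else None."""
        if detected_hz is None or self.index >= len(self.cues):
            return None
        expected_hz, action = self.cues[self.index]
        if abs(1200 * math.log2(detected_hz / expected_hz)) <= self.tolerance_cents:
            self.index += 1
            return action
        return None


sr = 44100
frame = [math.sin(2 * math.pi * 441 * i / sr) for i in range(2000)]
detected = detect_pitch(frame, sr)        # 441.0 (a period of exactly 100 samples)
cues = CueFollower([(441.0, "harmonize"), (660.0, "time_stretch")])
action = cues.update(detected)            # "harmonize"
```

Following a cue list, rather than reacting to every note, lets the computer stay in step with the score while the flutist keeps full control of timing.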

Jomenico: Iteration 31 (2004) (Jomenico = Margaret Schedel, John P. Young, and Nick Collins)

“We are sitting in a room, indifferent to everyone but the now. We are distorting the sound of Alvin Lucier’s voice, and we are going to fray and hack into the gloom again and again, until the recontextualised frequencies can bloom and be a force themselves … so that any semblance of his piece, with perhaps the deception of schism, is enjoyed. What you will hear then, are the supernatural resonant sequences of the tune articulated by beats. We regard this activity not so much as a demonstration of a creative act, but more as a way to groove out any regularities we geeks might have.”

The trio performed this work live on stage with three laptop computers at the SEAMUS 2004 National Conference. John was running Ableton Live, Margaret was running Max/MSP, and Nick was running SuperCollider.